Objectives and Assessment

At the completion of this section, you should be able to:

- create clear, concise, and measurable objectives following the ABCD format.

- categorize objectives into the cognitive, affective, or psychomotor domains.

- select precise terms for describing the level of student thinking required using Bloom's Taxonomy as a guide.

- define assessment and describe types appropriate for particular situations.

- define and give examples of formative and summative assessment.

- define and give examples of direct and indirect measures.

- match standards and objectives with assessments.

- describe qualities all assessments should include.

- describe and provide examples of assessments such as checklists, rubrics, and tests.

Begin by viewing the class presentation in Vimeo. Then, read each of the sections of this page.

Objectives

Objectives and assessment are interrelated. While objectives state what is expected, assessment provides tools to determine whether the learning outcomes have been reached. Both need to be defined before beginning the process of developing instructional materials.

Learning or performance objectives state what you expect participants to be able to do or talk about as a result of the learning experience. They provide a framework for interaction between the student and teacher by ensuring that everyone is on the "same page" during instruction. They also help teachers plan activities and assessments.

Objectives focus on specific, measurable learning expectations and outcomes. Objectives should be clear, concise, and measurable.

The classic approach to writing objectives is based on the ABCD format:

- A - State the audience, the learner population. Who is the student?

- B - State the behavior that is expected from the audience. What performance do you expect?

- C - Describe the conditions or circumstances surrounding the performance. What are the conditions of the behavior? What will you give the students to facilitate performance?

- D - Identify the degree or amount of behavior required for the performance. How will it be measured? How successful do they need to be?

Example: Given six periodicals (C), the college student (A) will categorize the items into one of three categories (i.e., scholarly, professional, popular) (B) without error (D).

Example: When shown a list of topics (C), the high school freshman (A) will be able to identify the broad and narrow topics (B) 80% of the time (D).

Example: Given a box of different types of yarn (C), the public library workshop participant (A) will select three skeins that would work well for a particular project (B) without choosing inappropriate yarn types (D).

It's sometimes helpful to write out the ABCDs before jumping into creating your objective statement. Explore the following example:

Topic: Primary resources

A - State the audience, the learner population. Who is the student?

First-year, B.A.-seeking history majors taking an Indiana History course with a research paper requirement.

B - State the behavior that is expected from the audience. What performance do you expect?

Students will be able to glean appropriate information from primary source materials.

C - Describe the conditions or circumstances surrounding the performance. What are the conditions of the behavior? What will you give the students to facilitate performance?

After receiving instructor approval of proposed thesis statements, students will meet for one class at a local research library with Indiana-focused special collections for a training session with the instructor and a librarian. Afterwards, students should be able to work with a special collections librarian on their own to identify suitable primary source materials.

D - Identify the degree or amount of behavior required for the performance. How will it be measured? How successful do they need to be?

Students will identify 3 primary sources that lend logical, factual supporting evidence to their thesis statements.

Objective: Given completion of an instructor-approved thesis statement and a research library training session (C), first-year students (A) will be able to locate appropriate primary sources (B) that lend 3 pieces of logical and factual evidence to the arguments of their thesis statements (D).

Why Objectives?

What's the purpose of stating objectives so precisely?

- You need to know what you need to teach (for teaching)

- You need to know what has been accomplished (for testing)

- You need to make students aware of what is expected (for learning)

Objectives are stated in the same manner regardless of the subject area content.

Example: Given CONDITION, the AUDIENCE will BEHAVIOR to DEGREE.

Example: The student will identify the illness or injury of a patient given a list of signs and symptoms 90% of the time.
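The "Given CONDITION, the AUDIENCE will BEHAVIOR to DEGREE" pattern can be treated as a fill-in template. The following is a minimal Python sketch, not a standard tool: the `Objective` class and its method names are hypothetical, made up here for illustration. It assembles an objective statement from the four ABCD parts and reports any part that was left blank.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Objective:
    """Hypothetical container for the four ABCD parts of an objective."""

    audience: str   # A: who is the learner?
    behavior: str   # B: the observable performance, starting with a verb
    condition: str  # C: circumstances or materials provided
    degree: str     # D: how well the learner must perform

    def statement(self) -> str:
        """Fill the 'Given CONDITION, the AUDIENCE will BEHAVIOR to DEGREE' pattern."""
        return f"Given {self.condition}, {self.audience} will {self.behavior} {self.degree}."

    def missing_parts(self) -> list[str]:
        """Return the names of any ABCD parts that were left blank."""
        return [name for name, value in vars(self).items() if not value.strip()]


periodicals = Objective(
    audience="the college student",
    behavior="categorize the items as scholarly, professional, or popular",
    condition="six periodicals",
    degree="without error",
)
print(periodicals.statement())
# Given six periodicals, the college student will categorize the items
# as scholarly, professional, or popular without error.
```

Calling `missing_parts()` on a draft is a quick way to notice an objective that assumes its degree or condition rather than stating it.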

While proper form dictates that the ABCDs all be included, some instructors make assumptions about materials available or degree of accuracy.

Examples:

- Use the library's online catalog to search for materials by author, title, keyword, or subject.

- Given the database PubMed, the student will correctly apply Boolean logic to locate information.

- Given a web browser, the student will identify major federal government health websites (e.g., NIH, CDC, NCCAM, NLM) and summarize the information they provide.

- Given an information source, the student will create a citation containing the required parts.

Try It!

Examine the objectives below and identify the ABCDs.

The student will identify the major bones of the body when given a drawing of a human body 100% of the time.

When given a mannequin, the student will accurately demonstrate four ways to prevent back injuries.

Explore the INCORRECT example below. This instructor has forgotten that objectives should be concise and measurable. Words like "know about" may be useful in goal statements, but not objectives. In addition, the objective should be stated from a student point of view. Finally, be sure that the objective can be measured. You can't measure "educating" or whether something is "important" to a student.

INCORRECT

Knowing about oral history will educate college students about the importance of archiving important events in the lives of members of the community.

Explore a couple of examples of revised objectives for this lesson. Notice the ABCDs.

CORRECT

Given interview question guidelines (C), high school students (A) will conduct (B) an oral interview that incorporates at least five of the recommended questions (D).

After an oral history experience (C), high school students (A) will describe (B) three (D) aspects of the experience that were valuable.

The Nature of Learning

When developing objectives, it's essential to consider the specific skill, knowledge, attitude, or disposition. The better you can describe the learning outcome, the easier it will be to design useful learning experiences.

In 1956, Benjamin Bloom, along with others, published a framework for categorizing learning goals called Taxonomy of Educational Objectives. Bloom identified three major types of learning: cognitive, affective, and psychomotor. As you write objectives, think about the knowledge and skills you want your students to develop.

Cognitive (thinking). Focuses on recalling, applying, analyzing, synthesizing, and evaluating information. Learners are able to do some cognitive activity such as apply rules or solve problems. Learn by storing and accessing information. What do I know?

Example: The index is an important part of a nonfiction book because it helps you find information.

Example: Opening the airway is the A in ABCDE.

Affective (feeling). Focuses on appreciations, attitudes, relationships, and values. Learners are able to make informed decisions based on values or priorities. Learn by accessing feelings and understanding emotions. What is the value?

Example: Using the index is a good way to find specific information in a book.

Example: Opening the airway will keep the patient alive.

Psychomotor (doing). Focuses on actions that demonstrate large or small motor skills. Learners are able to achieve a physical result such as operating a piece of equipment. Learn by doing. Kinesthetic movements. What do I do?

Example: I need to go to the back of the book to find the index.

Example: I need to perform the steps in opening the airway.

Students require proficiency in all three areas. Yet, some students struggle in one or more areas.

Example: The second grade student must RECOGNIZE (cognitive) the need for a book index, APPRECIATE (affective) the importance of using the index to locate information in a nonfiction book, and LOCATE (psychomotor) the index in a book.

Example: An EMT must RECOGNIZE (cognitive) the indications for oxygen therapy, APPRECIATE (affective) the level of distress felt by the patient, and ASSEMBLE (psychomotor) an oxygen tank and flow oxygen.

The revised taxonomy (Anderson, 2001) expands the cognitive area to four areas of knowledge:

- Factual knowledge - terminology, specific details and elements

- Conceptual knowledge - classifications and categories, principles and generalizations, theory, models, and structures

- Procedural knowledge - subject-specific skills and algorithms, subject-specific techniques and methods, criteria for determining when to use appropriate procedures

- Metacognitive knowledge - strategic knowledge, knowledge about cognitive tasks, self-knowledge

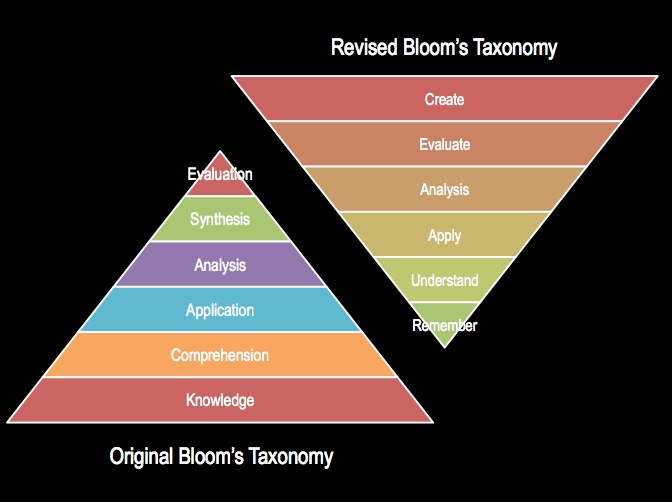

Bloom also developed a taxonomy for the cognitive domain, known as Bloom's Taxonomy, that moves from basic memorization activities to higher-level thinking. The framework involves six major categories (Bloom, 201-207):

- Knowledge - recall of specifics and universals, the recall of methods and processes, or the recall of a pattern, structure, or setting.

- Comprehension - the individual knows what is being communicated and can make use of the material or idea being communicated without necessarily relating it to other material or seeing its fullest implications.

- Application - use of abstractions in particular and concrete situations.

- Analysis - breakdown of a communication into its constituent elements or parts such that the relative hierarchy of ideas is made clear and/or the relations between ideas expressed are made explicit.

- Synthesis - putting together of elements and parts so as to form a whole.

- Evaluation - judgments about the value of material and methods for given purposes.

In 2001, a revised version of Bloom's Taxonomy was released by Anderson. Some of the words have been modified.

- Remember - recognize, recall

- Understand - interpret, exemplify, classify, summarize, infer, compare, explain

- Apply - execute, implement

- Analyze - differentiate, organize, attribute

- Evaluate - check, critique

- Create - generate, plan, produce

Blanchett, Powis, and Webb (2012) note that although we want students to work at the upper levels of the taxonomy, the lower levels provide a critical foundation for the higher levels of thinking.

"At the lower levels, teaching activities may include simply transmitting information to learners, whether by a lecture, podcast, handout or online resource. You will usually find that in order for learners to progress, some kind of interaction (known as 'transaction') needs to take place between the teacher and learner (or between the learners themselves). This may involve questions, tests, or activities. In order to reach the highest levels of learning, the knowledge needs to be 'transformed' by each individual learner - this means that the learner must understand for themselves and make their own sense and meaning of the knowledge. As a teacher, you can guide learners to this point through questioning to encourage reflection." (Blanchett, Powis, & Webb, 2012, 24)

Thinking Words

Sometimes it's useful to have a list of words to generate ideas. Use the following table for ideas.

| Knowledge | Comprehension | Application | Analysis | Synthesis | Evaluation |
|---|---|---|---|---|---|
| define | convert | change | analyze | categorize | appraise |
| describe | defend | compute | break down | combine | conclude |
| identify | distinguish | construct | categorize | compile | criticize |
| label | estimate | demonstrate | compare | compose | critique |
| list | explain | manipulate | diagram | create | defend |
| match | generalize | modify | differentiate | devise | evaluate |
| outline | give an example | operate | distinguish | design | interpret |
| recall | infer | produce | divide | generate | judge |
| recognize | interpret | relate | illustrate | organize | justify |
| retell | paraphrase | show | infer | plan | rate |
| select | predict | solve | relate | revise | summarize |
| state | summarize | use | separate | write | support |
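When drafting an objective, it can help to check which level of the taxonomy a chosen verb points to. The following Python sketch simply transcribes the thinking-words table into a dictionary; the names `THINKING_WORDS` and `levels_for` are hypothetical and chosen only for this illustration. Because some verbs appear in more than one column, the lookup returns a list of levels rather than a single answer.

```python
# Verbs transcribed from the thinking-words table, keyed by taxonomy level.
THINKING_WORDS = {
    "Knowledge": ["define", "describe", "identify", "label", "list", "match",
                  "outline", "recall", "recognize", "retell", "select", "state"],
    "Comprehension": ["convert", "defend", "distinguish", "estimate", "explain",
                      "generalize", "give an example", "infer", "interpret",
                      "paraphrase", "predict", "summarize"],
    "Application": ["change", "compute", "construct", "demonstrate", "manipulate",
                    "modify", "operate", "produce", "relate", "show", "solve", "use"],
    "Analysis": ["analyze", "break down", "categorize", "compare", "diagram",
                 "differentiate", "distinguish", "divide", "illustrate", "infer",
                 "relate", "separate"],
    "Synthesis": ["categorize", "combine", "compile", "compose", "create", "devise",
                  "design", "generate", "organize", "plan", "revise", "write"],
    "Evaluation": ["appraise", "conclude", "criticize", "critique", "defend",
                   "evaluate", "interpret", "judge", "justify", "rate",
                   "summarize", "support"],
}


def levels_for(verb: str) -> list[str]:
    """Return every taxonomy level whose column lists the given verb."""
    wanted = verb.strip().lower()
    return [level for level, verbs in THINKING_WORDS.items() if wanted in verbs]


print(levels_for("categorize"))  # ['Analysis', 'Synthesis']
print(levels_for("know about"))  # []
```

An empty result, as with "know about", suggests the verb is not on the list and may be too vague to measure. A verb found at several levels needs the rest of the objective to make the intended level clear.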

Try It!

Which is your strongest domain: cognitive, affective, or psychomotor? What about your students?

Where are the strengths and weaknesses of your students in terms of the domains: cognitive, affective, psychomotor?

Assessment

Assessment is the process of gathering, measuring, analyzing, and reporting data on students' learning. It helps teachers determine how much students learned and how well they learned it.

Assessment has become increasingly important in both K-12 and higher education settings over the past decade. Radcliff (2007) and others suggest two factors behind the interest in assessment at the higher education level. First, there has been an increasing emphasis on accountability. Second, there is a growing interest in creating measures that go beyond traditional grades. An increasing emphasis on learner-centered teaching dictates new ways to think about assessment.

Radcliff (2007) and others stress that information literacy assessment occurs at three levels:

- Classroom Assessment - based on specific classes and tied to class learning objectives such as an information literacy course or a discipline-specific course

- Programmatic Assessment - based on the learning goals of a program of study connected with a particular discipline such as teacher education or nursing

- Institutional Assessment - based on the broad goals of information literacy across the institution connected with accrediting bodies

According to Blanchett, Powis, and Webb (2012), when planning assessment ask three questions:

- What do I want to measure?

- Is this the best way to assess?

- Is what I am testing important or significant?

Assessment can also be used to determine the effectiveness of the instruction. Interacting with students about their performance can become an integral part of the learning process.

In contrast, evaluation involves judging the quality of student work or instruction. For example, it may include a final score or grade.

Assessment is the key to determining whether learners have met the expectations set forth in the objectives for the particular learning experiences as well as the standards established by recognized agencies such as ACRL and AASL.

Read!

Read How Should We Measure Student Learning? The Many Forms of Assessment from Edutopia.

Think about the assessments used in the classroom when you were growing up. Which assessments were most useful for you and your teachers? Which assessments do you prefer as an instructor? Why?

Designing Assessments

In designing assessments, instructors must balance the desire for a comprehensive assessment plan with a realistic approach to course management. Although it would be helpful for students to receive detailed feedback on every assignment, it may not be realistic given class sizes.

Before designing specific assessments, consider the following questions:

- Which learning objectives will be assessed?

- Will assessment be formative (during the learning experience with student feedback) or summative (at the end of the experience to measure learning)?

- Will assessments be norm-referenced (against peer standards) or criterion-referenced (based on established criteria)?

- Will assessments be authentic (based on real-world situations and experiences)?

- Will assessments be varied including a variety of strategies?

- What types of assessments will be most effective, efficient, and appealing?

- How will assessments be weighted?

- How will students submit their work?

- How will students access their grades and feedback?

Read!

Read A Faceted Taxonomy for Rating Student Bibliographies in an Online Information Literacy Game by Chris Leeder, Karen Markey, and Elizabeth Yakel (2012).

Notice their process for creating the assessment.

Peer Assessment and Review

If you plan to incorporate a peer evaluation component, consider the following:

- Will students get credit for their job as a peer reviewer? How will this be evaluated?

- What criteria will students use in their peer review?

- Will these reviews be made to the individual student or as part of a discussion that others can view?

- Are these formative reviews that students can use to improve their work before final project submission, or are they summative reviews?

Formative and Summative Assessment

Two types of assessment must be considered: Formative and Summative.

Formative Assessment

Formative assessment occurs during the instructional experience to gauge what students are learning. It is used to provide feedback to learners about their progress and to adjust instructional materials to improve the learning experience.

Formative assessment is used while materials are being developed to assist in revision and improvement.

Practice exercises, classroom response systems, and student reflections can all be used as part of formative assessment.

Objective: Given an information source such as a book, periodical article, or website, the student will be able to identify the citation elements for this format without error.

Practice Exercise: Students move from station to station in the classroom working with each of six different items representing different formats. For each item, they must select from a list of citation elements.

Summative Assessment

Summative assessment is a final check of student performance used to determine whether objectives have been reached. This might include a standardized test, final exam, survey, or reflection.

Summative assessment is used to evaluate instruction that has concluded.

Direct and Indirect Measures

Unfortunately, librarians don't always have the flexibility to require exams or assign grades. However, there are still many ways to determine whether participants are information literate. These tools include direct and indirect assessments.

Direct Measures

When possible, consider authentic assessments that reflect student skills. Products and performances such as a student report, annotated bibliography, public service announcement, journal, or sample search can all be examined to determine whether students possess specific skills.

Indirect Measures

Often, librarians use indirect information to judge student competency. Data from surveys and observations can be used to draw inferences about student capabilities.

Assessment in Courses

Establish an environment where quality is expected and students are aware of the expectations.

Consider the following ideas:

- Clearly state requirements such as due dates, posting requirements, and quality indicators.

- Build requirements for spelling, grammar, and mechanics into your guidelines.

- Use a variety of assessment approaches.

- Get students involved with assessment.

A quality assessment program should address the following four questions:

- Valid. Does it measure what is intended?

- Reliable. Does it consistently produce the same result?

- Fair. Is it free from bias?

- Comprehensive. Does it provide enough variety to address the needs of diverse learners?

Assessing Class Participation

While some instructors provide a vague item called "participation" in their course syllabus, it's recommended that course developers identify specific assessment criteria to evaluate student performance.

One popular approach is to require a minimal level of participation. For instance, you might require students to post an assignment or provide another type of significant contribution.

If the discussion prompt requests a single, correct answer, the discussion will end with the first posting. Avoid this by requiring a unique example or solution, or by asking a higher-level question. For example, turn a knowledge-level question into an evaluation-level question.

These student postings will then generate questions, ideas, suggestions, and feedback from other students. Students are then required to post one or more responses to other students.

Provide Specific Guidelines for Assessment

Be sure to provide specific guidelines for assessment. These guidelines may serve as the basis for all course discussions or be specifically connected to individual discussion items.

Example 1: Discussion Activity Assessment

The following "Sample Activity Assessment" was developed by Annette Lamb and Larry Johnson for their online courses.

Two points are possible on postings. One point is given for adequately addressing the specific requirements of the activity and posting it in the appropriate location. One point is given for providing an insightful posting with concrete, vivid examples. Your posting should cause classmates to think, react, investigate, question, laugh, or cry. Okay, maybe not laugh or cry, but at least stop and think, "that's interesting"... Quality postings contain some of the following characteristics:

- References the professional literature (texts, websites, supplemental reading, additional relevant materials located by the student)

- Concise and on target (100 to 250 words), but detailed enough for understanding and meaningful application to the issue addressed

- Raises an area of inquiry or an issue in a clear manner for further discussion or debate

- Recommends a resource which helps a fellow student gain more understanding on an issue or topic

- Summarizes information as evidence that either validates (supports) or suggests a different perspective (counters) and the information is referenced; such information may or may not agree with the poster's personal opinion

- Links together several postings to suggest a conclusion, a recommendation, a plan or a broader observation than what has been previously posted on the issue or topic

- Messages are posted on a frequent basis across the semester so that they interact with messages from other classmates and are not bunched for delivery.

One point is given for adding at least one response on the assignment thread. These can be added to the discussion of your posting or the posting of another student. It is suggested that you go back and read through the comments and suggestions added to your posting, but you are not required to respond specifically to these comments. Below you'll find examples of the kinds of "responses" that will be counted. Feel free to "get into" the discussion with as many comments to your peers as you'd like. However, to receive your 1 response point, be sure that your response is insightful and will help others in their learning.

- Act on a suggestion. For example, after reading a comment from a peer, you might decide to add an example, suggest a website address or other resource, answer a question, or clarify an idea.

- Provide feedback to others such as a specific comment or idea along with an example, expansion, or suggestion. In other words, "way to go Susie" is a good start, but won't get you a point. You could even start with "that's crap Susie", however the key is providing positive, constructive criticism or helpful and encouraging advice. Healthy debate is fine, but let's discourage mean-spirited comments.

- State an opinion and provide supportive evidence or arguments. This can be fun because it can really get a discussion going.

- Add an insight. If you've had an encounter with the topic being discussed, it would be valuable to hear your thoughts and "real world" experiences. This should be more than "I'll use the idea in class." How and why will you use the idea? Would the idea work in another area? How or why?
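The point scheme described in this example (two possible points for the posting plus one point for a response) can be written out as simple arithmetic. The Python sketch below is only an illustration of that tally; the function name `score_discussion` and its parameters are hypothetical, and the judgments about what counts as "insightful" still have to be made by the instructor.

```python
def score_discussion(meets_requirements: bool,
                     is_insightful: bool,
                     insightful_responses: int) -> int:
    """Tally discussion points: up to 2 for the posting, plus 1 for responding.

    meets_requirements: posting addresses the activity and is in the right place.
    is_insightful: posting is insightful, with concrete, vivid examples.
    insightful_responses: number of helpful responses the student added.
    """
    posting_points = int(meets_requirements) + int(is_insightful)
    # Only one response point is available, however many responses are posted.
    response_point = 1 if insightful_responses >= 1 else 0
    return posting_points + response_point


print(score_discussion(True, True, 3))   # 3
print(score_discussion(True, False, 0))  # 1
```

Capping the response credit at one point mirrors the guideline that students may comment as often as they like but earn a single response point.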

Example 2: Weekly Discussion Rubric

The following "Sample Grading Rubric for Online Discussion" was developed by Debbie King at Sheridan College (Palloff & Pratt, 2003, pp. 91-92).

Level of Participation During One Week

Participation will be based on levels of thinking reflected in your weekly threaded discussions.

- Minimum number of postings not met

- Minimums met; all discussions on Level I

- Minimums met; at least one example of discussion above Level I

- Minimums met; at least one example of discussion above Level I with at least one above Level II

- Minimums met; at least two examples of discussion above Level I with at least one above Level III

Evaluation of Levels of Thinking in Weekly Threaded Discussions

Critical Thinking

- Level I: Elementary Clarification: Introduce a problem; pose a question; pass on information without elaboration

- Level II: In Depth Clarification: Analyze a problem; identify assumptions

- Level III: Inference: Make conclusions based on evidence from prior statements; generalizing

- Level IV: Judgment: Express an opinion about a conclusion or the relevance of an argument, theory, or solution

- Level V: Strategy: Propose a solution; outline requirements for its implementation

Information Processing

- Level I: Surface: Repeat information; make a statement without justification; suggest a solution without explanation

- Level II: In Depth: Bring in new information; show links, propose a solution with explanation; show evidence of justification; present a wider view

Skills

- Level I: Evaluation: Question your ideas or approach to a task; for example, "I don't understand..."

- Level II: Planning: Show evidence of steps needed and prediction of what is likely to happen; for example, "I think I should..."

- Level III: Regulation: Show evidence of implementing a strategy and assessing progress; for example, "I have done..."

- Level IV: Self Awareness: For example, "I believe..." or "I have found...."

Matching Standards and Objectives with Assessments

It's important to match standards and objectives with assessments.

When designing questions, use the following guidelines (Allen & Babbie, 2008):

- Develop clear, concise questions.

- Avoid use of negative terms such as not.

- Be culturally sensitive.

- Avoid biased language.

- Avoid leading questions.

In the article The case for graphic novels, Hoover (2012) describes how the use of graphic novels and graphic nonfiction can be associated with information literacy standards. Although the examples below include ACRL standards, they could be adapted for AASL standards:

ACRL Standard 3.2.B. Analyzes the structure and logic of supporting arguments or methods.

Student Action. Use concepts from Understanding Comics to interpret the meaning of comic-specific devices such as panels, closure, and motion lines in American Born Chinese.

ACRL/IRIG 3.2.D. Explores representations of gender, ethnicity, and other cultural identifiers in images.

Student Action. Evaluate the use of animals to represent ethnic groups in Maus.

ACRL 4.2.A. Evaluates how effectively an image achieves a specific purpose.

Student Action. Describe the use of iconic abstraction in Bone, and explain the effect Smith's choices have on a reader's ability to identify with Phoney Bone.

ACRL 4.2.C. Assesses the appropriateness and impact of the visual message for the intended audience.

Student Action. Speculate on the intended impact of the full panel bleed on p. 31 of The Photographer on the reader. Describe their reaction to it and explore any differences or similarities to alternative interpretations.

In a study focusing on the development of online assessment tools, questions were matched with content-area and information literacy standards. Staley, Branch, and Hewitt (2010) developed an online tool for assessment of college student learning for discipline-based library instruction that associated ACRL standards as well as subject-area learning outcomes. They found that learning outcomes improved more in some areas than others. They recommend teaching fewer concepts and using digital learning objects to address basic research skills.

Examine the following examples from Staley, Branch, and Hewitt (2010):

| Question | Social Sciences Learning Outcomes | ACRL Standards / Performance Indicators | APA Learning Goals |

|---|---|---|---|

| 1. Imagine you have an assignment to write a paper based on scholarly information. Which would be the most appropriate source to use? | Understand the difference between popular and scholarly literature | Articulate and apply initial criteria for evaluating both the information and its sources | Use selected sources after evaluating their suitability based on appropriateness, accuracy, quality, and value of the source |

| 2. How can you tell you are reading a popular magazine? | Understand the difference between popular and scholarly literature | Articulate and apply initial criteria for evaluating both the information and its sources | Use selected sources after evaluating their suitability based on appropriateness, accuracy, quality, and value of the source |

| 3. What is the name of the linking tool found in SJSU databases that may lead you to the full text of an article? | Determine local availability of cited item and use Link+ and interlibrary loan services as needed | Determine the availability of needed information and makes decisions on broadening the information seeking process beyond local resources | Demonstrate information competence and the ability to use computers and other technology for many purposes. |

| 4. In considering the following article citation, what does 64(20) represent? Kors, A. C. (1998). Morality on today's college campuses: The assault upon liberty and dignity. Vital Speeches of the Day, 64(20), 633-637. | Identify the parts of a citation and accurately craft bibliographic references. | Differentiate between the types of sources cited and understand the elements and correct syntax of a citation for a wide range of resources | Quote, paraphrase and cite correctly from a variety of media sources |

| 5. In an online database which combination of keywords below would retrieve the greatest number of records? | Conduct database searches using Boolean strategy, controlled vocabulary and limit features | Construct and implement effectively-designed search strategies | Formulate a researchable topic that can be supported by database search strategies |

| 6. If you find a very good article on your topic, what is the most efficient source for finding related articles? | Follow cited references to obtain additional relevant information | Compare new knowledge with prior knowledge to determine the value added | Locate and use relevant databases…and interpret results of research studies |

| 7. What is an empirical study? | Distinguish among methods used in retrieved articles | Identify appropriate investigative methods | Explain different research methods used by psychologists |

| 8. Which area of the SJLibrary.org web site provides a list of core databases for different student majors? | Identify core databases in the discipline | Select the most appropriate investigative methods or information retrieval systems for accessing the needed information | Locate and choose relevant sources from appropriate media |

| 9. What does the following citation represent: Erzen, J. N. (2007). Islamic aesthetics: An alternative way to knowledge. Aesthetics and Art Criticism, 65(1), 69-75. | Identify the parts of a citation and accurately craft bibliographic references. | Differentiate between the types of sources cited and understand the elements and correct syntax of a citation for a wide range of resources | Identify and evaluate the source, context and credibility of information |

| 10. If you are searching for a book or article your library does not own, you can get a free copy through: | Determine local availability of cited item and use Link+ and Interlibrary Loan services as needed | Determine the availability of needed information and makes decisions on broadening the information seeking process beyond local resources | Locate and choose relevant sources from appropriate media |

| 11. How would you locate the hard-copy material for this citation? Erzen, J. N. (2007). Islamic aesthetics: An alternative way to knowledge. Aesthetics and Art Criticism, 65(1), 69-75. | Search library catalog and locate relevant items | Use various search systems to retrieve information in a variety of formats | Locate and use relevant databases…and interpret results of research studies |

Library Instruction Assessment

A consistent approach to assessment is essential to improve library instruction.

The Association of College and Research Libraries identified assessment and evaluation as important elements of information literacy best practices (ALA, 2003).

Knight (2002) suggests that developing and implementing a plan for library user education assessment can lead to the development of good practices.

In A Guide to Teaching Information Literacy, Blanchett, Powis, and Webb (2012, 32-33) suggest that all assessments contain five qualities:

- Validity - they should test the learning outcomes

- Reliability - they should be replicable on more than one occasion

- Efficiency - they should be a good use of time

- Fairness - they should be considerate of individual differences

- Value - they should examine what is most important

Examine the Sample Assignment from De Montfort University.

Think about the objectives that might be associated with this assignment.

Think about the assessment tools that would be used to evaluate this assignment.

How could you adapt this assignment for a different situation?

Selecting Assessment Tools

Assessment requires instructors to evaluate student performance in an accurate and valid manner.

There are dozens of different assessment tools that can be adapted to fit the needs of your students. These include anecdotal reports, checklists, conferencing, conversations, journals, peer assessment, portfolios, progress reports, quizzes, rubrics, self-assessment, scored discussions, and tests.

O'Malley and Pierce (1996) provided examples of assessments that could be observed and documented:

- Oral interviews

- Story or text retelling

- Writing samples

- Projects and exhibitions

- Experiments and demonstrations

- Constructed-response text items

- Teacher observations

- Portfolios

Authentic assessments are those that ask learners to perform in ways that demonstrate real-world applications or are meaningful beyond the learning situation. O'Malley and Pierce (1996) suggested the following characteristics of authentic assessments:

- Constructed responses

- Higher-order thinking

- Authenticity

- Integrative

- Process and Product

- Depth in Place of Breadth

In Mixing and matching: assessing information literacy, McCulley (2009) notes that authentic assessment of student learning is able to measure cognitive, behavioral, and affective levels of learning. She found that intentional use of these assessments is valuable across disciplines. McCulley identified three areas of assessment for information literacy:

- Knowledge Tests and Surveys. These assess both cognitive and affective elements. A test question might determine whether a student can use truncation and a survey item might check student confidence.

- Informal Assessments. These assess through observation and interactions with students. The instructor may watch students as they conduct searches or discuss findings.

- Performance Assessments. These assess student competence through contextual activities related to specific tasks such as steps in the research process. Instructors may examine student products such as bibliographies, reports, or concept maps.

McCulley (2009, 179) stresses that

"Performance assessments are the most authentic assessment because they require students to demonstrate that they can integrate and apply what they have learned. Other assessments, such as tests, surveys, and informal assessments, also play an important role in improving learning and teaching through providing snapshots of student learning during library sessions, building confidence, encouraging reflection, and making students active learners."

Gilchrist and Zald (2009, 168) suggest asking a series of questions when designing instruction through assessment.

- Outcome. What do you want the student to be able to do?

- Information Literacy Curriculum. What does the student need to know in order to do this well?

- Pedagogy. What type of instruction will best enable the learning?

- Assessment. How will the student demonstrate the learning?

- Criteria for Evaluation. How will I know the student has done this well?

Try It!

Complete Assessment, Evaluation, and Curriculum Redesign, the free online workshop from Educational Broadcast Corporation.

Think about your preferences in terms of assessment.

Checklist

A checklist is a list of criteria that can be used as a guide for project development and assessment. It often includes items for checking both process and product.

Often called a criterion-referenced checklist, it defines a set of performance criteria for addressing a learning objective. Enough detail must be provided so that students understand the expectation. Points are often awarded for meeting each item on the list.

Checklists are useful in ensuring completeness and consistency in tasks. They can reduce failure by helping a task become routine. The key to using checklists is to match the list to the objective. Then, provide students with the checklist during learning. In this way, they can anticipate the required elements. You may even wish to add explanation to assist the student in learning.

Some instructors use a ratings scale with their checklist. This is a continuum of numbers such as 1 being low or unacceptable and 5 being high or outstanding.

Example. A checklist is used to ensure students follow the process of evaluating web resources.

Example. A checklist is used to determine whether library staff are able to use the laminator correctly.
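The rating-scale approach to checklists can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a prescribed scoring method: the criteria wording, the `score_checklist` helper, and the 1 to 5 bounds are all hypothetical and chosen only for illustration.

```python
# Hypothetical checklist for evaluating a web resource. The criteria
# wording is illustrative, not taken from a published checklist.
CRITERIA = [
    "Identified the author or sponsor",
    "Checked the publication date",
    "Compared claims against a second source",
]


def score_checklist(ratings, low=1, high=5):
    """Total a rating-scale checklist.

    ratings maps each criterion to a number from low (unacceptable)
    to high (outstanding). Returns (points earned, points possible).
    """
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"Unrated criteria: {missing}")
    for criterion in CRITERIA:
        value = ratings[criterion]
        if not low <= value <= high:
            raise ValueError(f"{criterion!r} rated {value}, outside {low}-{high}")
    earned = sum(ratings[c] for c in CRITERIA)
    return earned, high * len(CRITERIA)


ratings = {
    "Identified the author or sponsor": 5,
    "Checked the publication date": 3,
    "Compared claims against a second source": 4,
}
earned, possible = score_checklist(ratings)
print(f"{earned} of {possible} points")
```

Raising an error for an unrated item, rather than silently scoring it as zero, reflects the idea that every item on the list should be matched to the objective and checked.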

Concept Maps

Concept maps provide a graphic representation of student understanding. Although they can be time-consuming to create, concept maps provide useful information about what students consider to be key ideas and organization.

For assessment, concept maps are useful for concepts that involve processes and relationships.

Example. Students might be asked to visualize the process of conducting a search or how two databases compare.

Example. Students might create a Venn Diagram comparing and contrasting two characters in a book like Pink and Say by Patricia Polacco.

Conferencing

Conferencing involves discussing progress and performance with students. A set of questions or a report may guide the conference meeting. Discussions can be formal or informal. Conferencing often provides specific feedback and guidance for students.

Student conferencing is a way to check student progress and also provide one-on-one assistance for learners. Guild (2003) conducts research conferences with students as they work through the inquiry process. Two of her conversation guides are outlined below with the standard questions she raises.

- What best describes the kind of relationship you are investigating:

  - Cause and effect

  - Application of a concept

  - Influence

  - Comparison

  - Other

- What is the most exciting discovery you have made so far?

- On the back of this paper, draw a simple concept map using the results of your background reading. Include major people, places, concepts, and relationships that you have been able to identify.

- Using the concept map as a guide, briefly state what your thesis question is currently.

- What is confusing in the research you have conducted so far?

- [Adding to her list] What is reassuring in the research you have conducted so far?

Conferencing about Questions, Arguments and Sources

- State the focus of your paper and list the topic areas that relate to it.

- On the back of this sheet, draw a simple concept map of your developing thesis. Be clear what kind of relationship(s) organize(s) your thesis.

- What new questions have you developed as a result of your research in supporting your thesis?

- What part of your argument is weakest?

- What resource so far has been the best for information? Why?

- What information are you looking for that you have not been able to find?

Example. A conference is used to check student progress on an oral history project.

Focus Groups

Focus groups are small, face-to-face groups of five to twelve people exploring a particular topic such as their experience taking a library workshop. A moderator guides the conversation.

Example. A focus group might be used to find out what participants learned during a small business seminar at the public library.

Interviewing

Conducting interviews is an effective way to collect information from students. They can be conducted face-to-face, by phone, or even through an email conversation.

Radcliff (2007) and others identified three types of interviewing for assessment purposes:

- Informal interviewing - the researcher uses a conversational approach without predetermined questions. Instead the questions are generated by the context of the conversation.

- Guided interviewing - the researcher uses a list of topics as a starting point for questions.

- Open-ended interviewing - the researcher uses pre-determined questions. With this approach, validity and reliability can be established.

In preparing for an interview, Radcliff (2007) suggests the following questions:

- What do you want your respondents to tell you?

- Have you considered the time frame and order of your questions?

- Are your questions worded properly?

- How will you record your responses?

- How will you enlist your respondents?

- When and where will the interview be conducted?

- Who will interview them?

Example. Interviews can help to determine what students gained from your instruction. Some sample questions include:

- Do you think a Google search or a research database search would contain more authoritative information? Why?

- What steps would you use to organize information for a social issues debate?

- How would you go about citing the Skype conversation with a NASA expert?

Journaling

Journaling involves students in recording their progress and observations. Teachers often use the journals to track student progress and design mini-lessons to address specific concerns or needs.

Example. Students select a Wikipedia article to read. They are asked to evaluate three of the original sources cited in the article. Their job is to keep a journal of their adventure in locating these original sources. What's the process of finding and evaluating these sources?

Observations

Observation is useful as both an informal assessment during instruction as well as a summative tool. Instructors might watch students use a database or listen to a small group discussion about evaluation techniques.

As an informal assessment, observations might include informal questions to check student understanding or stimulate conversations. They may also include self-reflection activities to help the instructor in providing additional materials or useful feedback.

Radcliff (2007) and others suggest the following tips for making informal observations:

- Be comfortable and familiar with what is being observed

- Identify what you will observe

- Limit how much you will observe

- Record key observations in key situations

In addition, Radcliff (2007) and others suggest basing observations on instructional goals and communicating effectively through clear and concise questions that make students comfortable.

As a formal assessment, observations can be useful in determining how students behave in "real-world" situations.

Example. Observers often use checklists as they observe. Is the new staff member able to operate the scanner? Is the child able to use the self-check out machine without assistance?

Matrix

Creating a matrix is an effective, informal assessment. Students are asked to identify characteristics, organize features, or make comparisons related to a concept. Students might place features or characteristics on the left side of the page and concepts to be compared across the top.

Example. Students place the characteristics of journals down the left side of a page. Then, create a column for scholarly publications and popular publications. Students would then check whether the particular feature such as "peer review" matched the scholarly and/or popular publications. This approach provides a quick way to determine whether students understand the concept. Students can then compare their approaches, lists of features, and choices.
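For instructors who collect matrices electronically, comparing a student's checks against an answer key can be sketched as follows. This is only an illustration: the feature names, the answer key, and the `mismatches` helper are hypothetical, and an instructor would substitute their own features and expected answers.

```python
# Illustrative answer key: each feature maps to the publication types
# it is expected to match. An instructor would supply their own.
ANSWER_KEY = {
    "peer review": {"scholarly"},
    "reference list": {"scholarly"},
    "glossy advertisements": {"popular"},
}


def mismatches(student_matrix):
    """Return the features whose checked columns differ from the key."""
    return sorted(
        feature
        for feature, expected in ANSWER_KEY.items()
        if student_matrix.get(feature, set()) != expected
    )


# One student's matrix: the set holds the columns the student checked.
student = {
    "peer review": {"scholarly"},
    "reference list": {"scholarly", "popular"},
    "glossy advertisements": {"popular"},
}
print(mismatches(student))
```

The returned list points to the features worth discussing when students compare their choices.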

Muddiest Point

Angelo and Cross (1993) use the muddiest point technique to gain immediate feedback from students. Students are asked to describe the most important thing they learned in class. Then, students are asked to write down the questions they still have about the topic or something they don't understand.

This informal assessment provides great information for the instructor that can be used to clear up misconceptions or provide corrective feedback.

Example. A student might write that they learned that "capital letters are ignored in Google". Then, they might state that they don't understand how the asterisk (*) should be used in a search. At the beginning of the next session, the instructor might review the use of the asterisk in a Google search.

One Sentence Summary

Another effective informal assessment is called the one sentence summary. Angelo and Cross (1993) suggest that students summarize their knowledge of a specific concept in one sentence. Like the "muddiest point," this technique is a great way to check student understanding and provide corrective feedback. It's also a great way for students to review learning and synthesize information into a concise statement.

Example. A student might write that "the purpose of the copyright law is to protect the interests of authors and encourage the creation and sharing of new knowledge." The instructor may have students compare answers and identify differences and misunderstandings.

Performance Assessments

Performance assessments focus on what students can demonstrate through processes (e.g., using a software package, evaluating a website) or products (e.g., presentations, speeches, written reports, video productions). Observing a student's performance or examining their products provides useful insights into their knowledge and skills. These assessments are useful in evaluating higher order thinking skills.

Example. The student will create a series of blog postings tracing their experience searching for information about a social issues topic. These postings will be assessed using a rubric.

Example. High school students will do a skit about cyberbullying for a sixth grade class. A checklist will be used to ensure that they've included the required information about cyberbullying.

Portfolios

A portfolio is a thoughtful, annotated collection of student work. Portfolios can serve many purposes. Although they are often used as a culminating activity, they can also be used to trace student progress.

In some cases, a portfolio includes items dictated by the instructor. In a learner-centered classroom, students choose and annotate the items. Sometimes the instructor provides guidelines or requirements; however, students make the selections.

A portfolio generally contains a checklist of items included, the items themselves, and reflections or descriptions written by the student.

Electronic Portfolios are a creative means of organizing, summarizing, and sharing artifacts, information, and ideas about teaching and/or learning, along with personal and professional growth. The reflective process of portfolio development can be as important as the final product. In many cases, they are used as part of faculty and student evaluation along with other assessment tools such as standardized tests. A portfolio is a sampling of the breadth and depth of a person's work conveying the range of abilities, attitudes, experiences, and achievements.

Rubrics

A rubric is a scaled set of criteria defining a range of performances. It describes successful performance. Indicators are often written for novice, apprentice, and expert levels.

Rubrics describe student competencies using two dimensions: criteria and level of performance. A table is used to format these two elements with the criteria down the left column and the levels of performance across the page.

- Criteria. Identify the specific indicator or part of the performance.

- Level of Performance. Identify the range of performance from high to low, competent to not competent, or exemplary to lacking.

Example:

- Indicator: Integrated new information into one's own knowledge

  - Basic: Put information together without processing it.

  - Proficient: Integrated information from a variety of sources to create meaning that is relevant to own prior knowledge and draws conclusions.

  - Advanced: Integrated information to create meaning that connects with prior personal knowledge, draws conclusions, and provides details and supportive evidence.

- Indicator: Distinguished among fact, point of view, and opinion.

  - Basic: Copied information as given and tended to give equal weight to fact and opinion as evidence.

  - Proficient: Used both facts and opinion, but labeled them without a paraphrased use of the evidence.

  - Advanced: Linked current, documented facts and qualified opinion to create a chain of evidence to support or reject an argument.

Example:

| | Proficient | Developing | Unacceptable |

| Provides a thesis statement | Provides complete statement including a clear, concise, and arguable position that is well-developed | Provides basic statement with an arguable position | Provides weak statement, lacking arguable position |
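The two dimensions of a rubric, criteria and levels of performance, map naturally onto a nested lookup table. The Python sketch below reuses the thesis statement row from the example table. The `LEVELS` ordering, the 0 to 2 point values, and the `feedback` helper are assumptions made for illustration, not part of any published rubric.

```python
# Levels of performance run across the table. A higher index means
# stronger work; the 0-2 point values are an assumed scale.
LEVELS = ["Unacceptable", "Developing", "Proficient"]

# Criteria run down the left column. Only the thesis statement row from
# the example table is shown; a real rubric would list several criteria.
RUBRIC = {
    "Provides a thesis statement": {
        "Proficient": (
            "Provides complete statement including a clear, concise, "
            "and arguable position that is well-developed"
        ),
        "Developing": "Provides basic statement with an arguable position",
        "Unacceptable": "Provides weak statement, lacking arguable position",
    },
}


def feedback(selected):
    """Return (criterion, level, descriptor, points) for each criterion.

    selected maps a criterion to the level the instructor chose.
    """
    rows = []
    for criterion, level in selected.items():
        descriptor = RUBRIC[criterion][level]
        points = LEVELS.index(level)
        rows.append((criterion, level, descriptor, points))
    return rows


for row in feedback({"Provides a thesis statement": "Developing"}):
    print(row)
```

Returning the descriptor along with the points keeps the score tied to a statement the student can act on, rather than reporting a bare number.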

Read!

Read one of the following articles:

Belanger, Jackie, Zou, Ning, Mills, Jenny Rushing, Holmes, Claire, Oakleaf, Megan (October 2015). Project RAILS: Lessons learned about rubric assessment of information literacy skills. portal: Libraries and the Academy, 15(4), 623-644.

OR

Jastram, Iris, Leebaw, Danya, Tompkins, Heather (April 2014). Situating information literacy within the curriculum: using a rubric to shape a program. portal: Libraries and the Academy, 14(2), 165-186.

OR

Lowe, M. Sara, Booth, Char, Stone, Sean, Tagge, Natalie (July 2015). Impacting information literacy learning in first-year seminars: a rubric-based evaluation. portal: Libraries and the Academy, 15(3), 489-512.

Rubrics are particularly useful in dealing with complex behaviors that involve many components. Tasks that involve the creation of products, demonstration of real-world skills, or development of authentic documents work well with rubrics.

From a student perspective, rubrics are helpful in providing direct feedback showing specific areas in need of improvement. This approach makes scores more meaningful.

From a teacher perspective, rubrics help break down complex skills into manageable pieces, making assessment easier. They also reduce bias in grading by providing easily defendable, precise statements.

When building rubrics, carefully consider key differences between performance levels that emphasize quality over quantity. Keep the wording clear, concise, consistent, and positive at all levels. Use detailed descriptions that provide useful feedback for students.

In their 2009 study titled Information literacy rubrics within the disciplines, Fagerheim and Shrode reported on the development of rubrics for assessing information literacy in chemistry and psychology. They found student scores clustering at the high end of the measure and found less variation in scores than expected. However, they note that "rubrics offer a promising and important method to assess the information literacy skills of today's students" (2009, 164).

Fagerheim and Shrode (2009) include examples from the rubric they developed for their chemistry information literacy assessment.

Go to Information Literacy/Critical Thinking Skills Evaluation Criteria from Temple for more ideas.

Try It!

Go to Rubristar.

Explore rubrics.

Make your own.

Surveys

Surveys are a common assessment tool. Pre- and post-test surveys are often used to assess student readiness and determine changes in student competence levels.

Radcliff (2007) and others suggest a number of steps for creating effective surveys:

- Define the survey objectives

- Write the survey items

- Construct the survey

- Conduct a pretest

- Prepare a letter or message of transmittal

- Conduct the initial distribution and follow-up

- Track responses

- Compile responses

- Determine what to do about nonrespondents

- Share results with respondents

Tests

Quizzes, tests, and examinations are all popular forms of assessment. Traditional testing situations often call for simple sentence completion activities. Move your testing to a higher level by asking students to synthesize information and draw conclusions.

Ideas to increase the thinking required in testing situations:

- Incorporate case studies or scenarios into testing situations

- Provide data to analyze

Read!

Read Assessment in the One-Shot Session: Using Pre- and Post-tests to Measure Innovative Instructional Strategies among First-Year Students by Jacalyn E. Bryan and Elana Karshmer (2013).

Test Preparation

It's helpful to provide students with assistance when preparing for testing situations.

Review. Provide a review guide with key ideas and resources. Explore examples of study and review materials:

- Biology - study materials, assignments, and solutions

- Psychology - study materials

Practice. Provide practice questions, sample tests, and answer keys. Explore examples of practice tests:

- Biology - practice exams and solutions

Quizzes are a quick way to check student knowledge against objectives. Explore some sample quizzes. Think about how you can adapt the questions for your own purposes:

- Info Skills from University of East London

- Writing Quiz from De Montfort University

Many online tools exist for creating quizzes.

Example. Go to Quia Library Quizzes to explore examples.

Test Development

When designing a test, match the type of test item with the learning outcome and course content. Options include:

True/False. Students identify an item as true or false.

- Pros - Works well for knowledge level content and checking for misconceptions; easy to write; good for either/or content

- Cons - Poor for checking high level thinking; Difficult to discriminate; need many questions for high reliability

- Tips - Avoid double negatives and words like only, always, never, generally; avoid direct quotes from course materials

Matching. Students match items.

- Pros - Works well for knowledge level content; good for large amount of material; problems & solutions, parts & wholes, terms & definitions, causes & effects, tools & uses; events & dates

- Cons - Poor for checking high level thinking; focuses on recognition

- Tips - Provide good directions; keep items short; use like topics and structure; organize in logical order

Multiple Choice. Students identify the correct answer.

- Pros - Works well for all knowledge content; versatile; effective & efficient; fewer guesses than T/F; broad range of content; good for facts, concepts and procedures

- Cons - Difficult to construct good questions and effective distracters

- Tips - Provide a simple, clear stem that could be answered without the options; randomly present options; make items independent of each other; provide plausible distracters; avoid double negatives, all or none of the above, always/never

Short Answer. Students construct a short response.

- Pros - Works well for all knowledge content; minimal guessing; good for definitions; easy to create; can require high level thinking; emphasis is on recall rather than recognition

- Cons - Time consuming to score; only suitable for questions with short responses

- Tips - Provide specific criteria; phrase question for a single answer

Essay. Students construct a long response. Essay questions can come in two formats: closed and open.

Example: Discuss the difference between primary and secondary sources.

Example: Sally used a peer-to-peer file-sharing service. Is this a form of plagiarism or not?

- Pros - Works well for all knowledge content; easy to build; flexible

- Cons - Time consuming to score; can be subjective; limited coverage

- Tips - Provide specific criteria and task; use checklist for scoring

Consider the following elements when writing test items:

- Match assessments to objectives.

- Focus on essential questions and key concepts.

- Be clear and concise.

- Be unambiguous.

- Avoid terms such as not and often.

- Avoid "all of the above" or "none of the above" or "A & B but not C".

- Avoid what students would consider "trick questions".

- Be culturally sensitive.

- Use the appropriate reading level.

- Stick to three to five options.

- Provide clear directions for the test.

Test Administration

Testing can be a problem in online courses. Think about how the tests will be administered. Options include:

- Closed Book, Monitored. Students may be monitored during a special, face-to-face session. This approach works well for online courses; however, it can cause scheduling problems. Some online instructors simply ask students to find an adult who will supervise their examination. This adult would sign a form indicating that the student was monitored.

- Open Book/Note. This approach works well for online courses. Students are allowed to go back over course materials as they wish. Many instructors do not allow students to ask for assistance from fellow students or others. However, if students are at remote sites, monitoring their access to peers can be a problem.

- Open Testing. This approach allows students to access course materials and even classmates during a testing situation. The advantage of this approach is that it eliminates "cheating." All sources are available. This approach is useful when the purpose of the test is simply to be sure students can answer the questions.

Pre-tests and Post-tests

Many instructors give pre-tests and post-tests. Pre-tests are intended to check student entry skills and prior knowledge about the topic. Post-tests are used to determine whether the students acquired new skills as a result of instruction.

Jackson (2006) used an interactive, web-based tutorial titled Plagiarism: The Crime of Intellectual Kidnapping to provide instruction and check student understanding. The tutorial included a pre- and posttest. Students' understanding improved from the pre- to the posttest.
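One simple way to summarize a pre-test and post-test comparison is the average change in each student's score. The Python sketch below assumes both tests use the same point scale and that scores are listed in the same student order. The `average_gain` helper and the scores are hypothetical; they are not Jackson's data or method.

```python
from statistics import mean


def average_gain(pre_scores, post_scores):
    """Mean per-student change from pre-test to post-test.

    Both lists must hold one score per student, in the same order.
    """
    if len(pre_scores) != len(post_scores):
        raise ValueError("Each student needs both a pre-test and a post-test score")
    return mean(post - pre for pre, post in zip(pre_scores, post_scores))


# Hypothetical scores for four students on a 10-point quiz.
pre = [4, 6, 5, 7]
post = [7, 8, 6, 9]
print(average_gain(pre, post))
```

A positive average suggests improvement across the group, though it does not show which individual students improved or why.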

Try It!

Read Plagiarism instruction online: assessing undergraduate students' ability to avoid plagiarism. Notice how the quizzes were developed as part of the instruction.

Examine the Plagiarism: The Crime of Intellectual Kidnapping tutorial, pre- and posttest.

Think about how surveys, quizzes and tests can be incorporated into online tutorials.

Try It!

Read Classroom Assessment Techniques from Vanderbilt University for lots of ideas.

Go to Quizzes for question ideas related to information inquiry topics.

Use tools such as SurveyMonkey as an easy way to administer a quick quiz to check student understandings.

Standardized Tests

Tests can be time-consuming to prepare. Consider using or adapting an existing resource that is aligned with national standards. Larson, Izenstark, and Burkhardt (2010) adapted an existing proficiency exam for use in assessing student learning in a library instruction course. They found that the exam was aligned with the ACRL standards and that their students performed well after taking the course.

A few standardized assessment tools have been developed for information literacy.

- iSkills is a fee-based assessment administered by the Educational Testing Service.

- Madison Assessment was developed by James Madison University. It is now distributed by license, but you can check out the demos.

- Project SAILS Information Literacy Assessment was developed by Kent State for higher education. The service is fee-based.

- Research Readiness Self-Assessment was developed by Central Michigan University. This interactive tool helps users "identify, locate, evaluate and use information resources". Email to demo the service.

- TRAILS: Tool for Real-time Assessment of Information Literacy Skills was developed by Kent State. This tool focuses on skills based on 3rd, 6th, 9th, and 12th grade standards. The service is free.

Try It!

Go to TRAILS: Tool for Real-time Assessment of Information Literacy Skills.

Explore their service.

Try the demos at one of the other services.

Read!

Read Research Methods (PDF) from the Museums, Libraries and Archives Council to explore the strengths of different approaches to assessment.

Read!

Read Standardised library instruction assessment: an institution-specific approach by Shannon Staley, Nicole Branch, and Tom Hewitt (2010).

Manage Assessment

Inform learners of the plan for assessment. Monitor student performance throughout the course and provide feedback about progress.

Continuous Assessments

Rather than relying on a single measure of success such as a test, continuous assessment provides repeated opportunities for the learner to reflect on their progress.

Involve learners in self-assessments:

- ungraded activities built into course materials

- practice activities and sample exams

- key point reviews and self-check lists

- informal communications with the instructor and peers

Continuous assessment also requires the instructor to check learner understanding.

The learner frequently demonstrates and applies what they've learned through:

- completing an assignment

- participating in a discussion

- answering questions

The instructor provides:

- ongoing encouragement

- critical feedback

- suggestions for improvement

Feedback should be timely and informative. For instance, rather than simply assigning points, the instructor might indicate that the posting "provided excellent examples" or "lacked the required citations."

Bluemle, Makula, and Rogal (2013) implemented a new performance assessment model for library instruction at the college level. High-level thinking skills for information literacy, along with assessments, were woven into a three-course sequence.

Feedback and Grades

Many course management systems provide a gradebook for posting comments and student grades.

Notes for instructors:

- Some course management systems automatically calculate grades. Be sure you've set up the system to reflect your grading system.

- Some systems allow the instructor to control access to grades. Check the setup.

- Keep a backup of all grades.
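One way to confirm that an automatic gradebook reflects your grading system is to recompute a few students' grades by hand or with a short script and compare the results. The following is only a minimal sketch in Python: the category names, weights, and scores are hypothetical, and it assumes a simple weighted-percentage scheme, which may differ from how a particular course management system calculates grades.

```python
def weighted_grade(scores, weights):
    """Return one student's weighted percentage.

    scores:  dict mapping category name -> percentage earned (0-100)
    weights: dict mapping category name -> weight (weights must sum to 1.0)
    """
    # Catch a common setup error: weights that do not add up to 100%.
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("category weights must sum to 1.0")
    # Every weighted category needs a score before a grade is computed.
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(scores[category] * weights[category] for category in weights)


# Hypothetical grading scheme and scores for one student.
weights = {"assignments": 0.5, "discussion": 0.2, "final_project": 0.3}
scores = {"assignments": 90.0, "discussion": 80.0, "final_project": 70.0}

print(round(weighted_grade(scores, weights), 1))  # 82.0
```

If the number printed here differs from what the gradebook shows for the same scores, the gradebook's weights or categories probably need to be adjusted.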

Consider methods for providing students with information about their performance.

- Comments in the course online gradebook

- Email message with checklist and comments

- Commenting function in word processing documents such as Microsoft Word

- Discussion function in a wiki

Resources

Allen, R. & Babbie, E. R. (2008). Research Methods for Social Work. Thomson Brooks/Cole.

American Library Association. (2003). Characteristics of Programs of Information Literacy that Illustrate Best Practices: a Guideline. American Library Association. Available: http://www.ala.org/acrl/standards/characteristics

Anderson, Lorin W. (2001). A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom's Taxonomy of Educational Objectives. Acorn.

Angelo, Thomas A. & Cross, K. Patricia (1993). Classroom Assessment Techniques: A Handbook for College Teachers. 2nd Edition. Jossey-Bass Higher and Adult Education.

Belanger, Jackie, Zou, Ning, Mills, Jenny Rushing, Holmes, Claire, Oakleaf, Megan (October 2015). Project RAILS: Lessons learned about rubric assessment of information literacy skills. portal: Libraries and the Academy, 15(4), 623-644.

Blanchett, Helen, Powis, Chris, & Jo Webb (2012). A Guide to Teaching Information Literacy: 101 Practical Tips. Facet Publishing.

Bloom, Benjamin (1956). Taxonomy of Educational Objectives.

Bluemle, S. R., Makula, A. Y., & Rogal, M. W. (2013). Learning by doing: Performance assessment of information literacy across first-year curriculum. College & Undergraduate Libraries, 20(3-4), 298-313.

Chartered Institute of Library and Information Professionals. Information Literacy. Available: http://www.cilip.org.uk/get-involved/advocacy/information-literacy/pages/default.aspx

Dick, Walt, Carey, Lou, and Carey, James O. (2011). The Systematic Design of Instruction. Seventh Edition. Pearson.

Fagerheim, Britt A., Shrode, Flora G. (2009). Information literacy rubrics within the disciplines. Communications in Information Literacy, 3(2), 158-170.

Gilbert, Julie K. (2009). Using assessment data to investigate library instruction for first year students. Communications in Information Literacy, 3(2), 181-192.

Gilchrist, D. & Zald, A. (2008). Instruction and program design through assessment. In C. Cox & E. B. Lindsay (eds.), Information Literacy Instruction Handbook. ACRL.

Jackson, P. A. (September 2006). Plagiarism instruction online: assessing undergraduate students' ability to avoid plagiarism. College & Research Libraries, 67(5), 418-428. Available: http://crl.acrl.org/content/67/5/418.full.pdf+html

Jastram, Iris, Leebaw, Danya, Tompkins, Heather (April 2014). Situating information literacy within the curriculum: using a rubric to shape a program. portal: Libraries and the Academy, 14(2), 165-186.

Knight, Lorrie A. (2002). The role of assessment in library user education. Reference Services Review, 30(1), 15-24.

Larsen, Peter, Izenstark, Amanda, & Burkhardt (2010). Aiming for assessment: notes from the start of an information literacy course. Communications in Information Literacy, 4(1).

Lowe, M. Sara, Booth, Char, Stone, Sean, Tagge, Natalie (July 2015). Impacting information literacy learning in first-year seminars: a rubric-based evaluation. portal: Libraries and the Academy, 15(3), 489-512.

McCulley, Carol (2009). Mixing and matching: assessing information literacy. Communications in Information Literacy, 3(2), 172-180.

O'Malley, J. M. & Pierce, L. V. (1996). Authentic Assessment for English Language Learning: Practical Approaches for Teachers. Addison-Wesley Publishers.

Ostenson, Jonathan (January 2014). Reconsidering the checklist in teaching Internet source evaluation. portal: Libraries and the Academy, 14(1), 33-50.

Radcliff, Carolyn J., Mary Lee Jensen, Joseph A. Salem Jr., Kenneth J. Burhanna, and Julie A. Gedeon (2007). A Practical Guide to Information Literacy Assessment for Academic Librarians. Westport, CT: Libraries Unlimited.

Staley, Shannon M., Branch, Nicole A. & Hewitt, Tom L. (September 2010). Standardised library instruction assessment: an institution-specific approach. Information Research, 15(3). Available: http://informationr.net/ir/15-3/paper436.html